Advanced Topics on TFTP

TFTP has been with us for quite a long time now. It was initially documented in the Internet Engineering Task Force (IETF) standard RFC-783 (1981) and later in its updated version, RFC-1350 (1992). Many consider it the simplest file transfer protocol, which is why it became the favorite choice for embedded devices and anything else with limited hardware resources that needs to send and/or receive files.

With simplicity in mind, it is not hard to understand why the protocol has no provisions for user authentication. Some people consider TFTP insecure because of this, but since the protocol does not include file listing or removal capabilities either, many others consider TFTP security "acceptable" in many scenarios. Even today, very well-known companies rely on nothing more than concealed HTML URLs for customer downloads of sensitive material.

The protocol has received two major improvements. The first was RFC-2347, which introduced the "option negotiation" framework as a way to dynamically coordinate parameters between requester and provider before a particular transfer begins. Right after it, RFC-2348 introduced "block-size" (option name "blksize") as one of the negotiated options using that framework. This way the fixed 512-byte block size mandated by the original specification could be dynamically "negotiated" to higher values on a per-transfer basis.
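As a minimal sketch of what option negotiation looks like on the wire, the following Python encodes a read request that carries options and decodes the server's option acknowledgment. The function names `build_rrq` and `parse_oack` are illustrative choices, not part of any particular library; the packet layout (a 2-byte opcode followed by NUL-terminated strings) follows RFC-1350 and RFC-2347.

```python
import struct

OP_RRQ = 1   # read request opcode (RFC-1350)
OP_OACK = 6  # option acknowledgment opcode (RFC-2347)


def build_rrq(filename, mode="octet", options=None):
    """Encode a read request: opcode, then NUL-terminated ASCII strings."""
    fields = [filename, mode]
    for name, value in (options or {}).items():
        fields += [name, str(value)]  # each option is a name/value string pair
    body = b"".join(f.encode("ascii") + b"\x00" for f in fields)
    return struct.pack("!H", OP_RRQ) + body


def parse_oack(packet):
    """Decode an OACK into a {option_name: value_string} dictionary."""
    (opcode,) = struct.unpack("!H", packet[:2])
    if opcode != OP_OACK:
        raise ValueError(f"not an OACK (opcode {opcode})")
    parts = packet[2:].split(b"\x00")[:-1]  # drop the empty tail after the last NUL
    names, values = parts[0::2], parts[1::2]
    # Option names are case-insensitive, so normalize them to lower case.
    return {n.decode("ascii").lower(): v.decode("ascii") for n, v in zip(names, values)}


rrq = build_rrq("pxeserva.0", options={"blksize": 1456})
print(rrq)
print(parse_oack(b"\x00\x06blksize\x001456\x00"))
```

A client would send `rrq` to UDP port 69 and only use the larger block size if the option comes back in the OACK.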

Considering the file transfer itself, the protocol is surprisingly simple. It runs over UDP, so it can work even without a complete TCP/IP stack implementation, but there is no TCP to guarantee packet delivery. For transport and session control there is instead only a rudimentary block retransmission scheme, triggered when a missing block or acknowledgment is detected. The data/acknowledgment exchange is performed in lock-step: only one block is sent before the sender stops transmitting and waits for the corresponding block acknowledgment. This last characteristic (the single data/acknowledgment block sequence) is today TFTP's Achilles' heel: the TFTP transfer rate is very sensitive to the system's latency. Not only does network latency negatively affect TFTP performance; a busy host or client on a low-latency network can be a problem as well.
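The latency sensitivity can be made concrete with a rough model: in lock-step, every block costs at least one full round trip, so the transfer time is bounded below by the number of blocks times the round-trip time. The sketch below is only that simplified model; the file size and round-trip values are assumptions chosen for illustration, and "round trip" here includes host processing delay as well as network delay.

```python
def block_count(file_size, blksize=512):
    """Number of DATA blocks needed for a file of `file_size` bytes."""
    # A transfer ends with a block shorter than blksize, so a file that is an
    # exact multiple of blksize still needs one final, empty block.
    return file_size // blksize + 1


def lockstep_time(file_size, blksize, round_trip_s):
    """Lower bound on transfer time when every block waits for its own ACK."""
    return block_count(file_size, blksize) * round_trip_s


size = 180 * 1024 * 1024  # hypothetical 180 MiB file
for rtt_ms in (0.2, 1.0, 5.0):  # assumed round-trip times, in milliseconds
    seconds = lockstep_time(size, 1456, rtt_ms / 1000)
    print(f"round trip {rtt_ms} ms -> at least {seconds:.0f} s")
```

The model ignores retransmissions and serialization time, so real transfers will be slower, but it shows why the time scales linearly with latency regardless of the available bandwidth.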

Let's see what a typical TFTP file transfer looks like when watched on a network sniffer (Wireshark):

192.168.20.30 -> 192.168.20.1 TFTP Read Req, File: pxeserva.0\0, Trans typ: octet\0 blksize\0 = 1456\0

192.168.20.1 -> 192.168.20.30 TFTP Option Acknowledgement

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 0

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 1

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 1

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 2

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 2

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 3

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 3

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 4

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 4

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 5

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 5

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 6

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 6

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 7

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 7

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 8

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 8

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 9

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 9

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 10

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 10

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 11

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 11

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 12

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 12

List 1: A typical RFC-1350 file transfer

It is easy to see in List 1 the file request, its option acknowledgment, another acknowledgment (Block: 0), and finally the transfer itself where for every data packet sent the sender synchronously stops and waits for its corresponding acknowledgment.

Since RFC-906 "Bootstrap Loading using TFTP" (1984), through Intel's PXE "Preboot Execution Environment" (1999), up to today's versions of Microsoft's WDS and derivatives, TFTP has always been the protocol of choice for the early stages of any well-known net boot/install strategy. The TFTP code used by the initial PXE load is actually embedded in the target's network card. After an initial DHCP transaction, it is this embedded code that requests the bootstrap loader and the files that follow from the TFTP server.

Bootstrap loaders are very small files (~20K) that will not trigger anyone's anxiety even if the transfer rate is not the best. But the bootstrap loader immediately has to transfer heavier components, also by TFTP. This is the moment when the lack of performance begins to be noticeable. Today some TFTP transfers can be very heavy; e.g. net booting MS Windows 8 involves the TFTP transfer of a 180MB Windows PE component used in its install procedure. This can be considered heavy stuff for a regular RFC-1350 TFTP transfer.

At this point, when today's networks are pretty reliable, when the TFTP server usually resides on the same host as the DHCP server, and when that host is usually connected to the same (allow me to say) collision domain as the PXE target (no routing), it is obvious that TFTP's original lock-step strategy has room for improvement.

The first attempts in this direction were carried out by TFTP servers implementing a "Windowed" version of the protocol. The basic idea is to send N consecutive blocks instead of just one, then stop and wait for the sequence of N corresponding acknowledgments before repeating the cycle. Some people called this "Pipelined" TFTP; I personally consider "Windowed" a more appropriate term.

192.168.20.30 -> 192.168.20.1 TFTP Read Req, File: pxeserva.0\0, Trans typ: octet\0 blksize\0 = 1456\0

192.168.20.1 -> 192.168.20.30 TFTP Option Acknowledgement

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 0

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 1

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 2

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 3

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 4

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 1

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 2

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 3

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 4

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 5

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 6

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 7

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 8

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 5

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 6

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 7

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 8

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 9

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 10

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 11

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 12

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 9

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 10

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 11

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 12

List 2: A classic RFC-1350 client against a "windowed" TFTP server using window-size=4

In List 2 we see the sliding window of 4 blocks go from data sender to data receiver in a single batch; next the data sender stops and waits for the sequence of 4 acknowledgments from the data receiver. The first depicted transfer of this 12-block file (List 1) took 0.122008s; the second one (List 2) took 0.112087s. The difference may look small, but please consider that for the purposes of this particular test I used a very small file.

Without a doubt this first step away from the lock-step scheme represents an improvement, but the data sender still has to wait for, and deal with, a lot of unnecessary acknowledgments sent by the unaware data receiver. In addition, we do not know whether error recovery might be affected on some clients.
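The trade-off of this un-negotiated windowed mode can be sketched by generating the packet sequence and counting two things: how often the sender stalls waiting for acknowledgments, and how many acknowledgments travel back. This is an illustrative model with hypothetical function names, not code from any TFTP server; it assumes an error-free transfer and a client that acknowledges every block.

```python
def unnegotiated_window_trace(total_blocks, window):
    """Packet sequence when the sender pushes `window` blocks per batch and an
    unaware classic client acknowledges every single block."""
    events = []
    first = 1
    while first <= total_blocks:
        last = min(first + window - 1, total_blocks)
        events += [("DATA", n) for n in range(first, last + 1)]
        events += [("ACK", n) for n in range(first, last + 1)]
        first = last + 1
    return events


def sender_stalls(events):
    """Count the points where the sender stops sending DATA and waits for an ACK."""
    return sum(
        1 for prev, cur in zip(events, events[1:])
        if prev[0] == "DATA" and cur[0] == "ACK"
    )


for window in (1, 4):
    trace = unnegotiated_window_trace(12, window)
    acks = sum(1 for kind, _ in trace if kind == "ACK")
    print(f"window={window}: stalls={sender_stalls(trace)}, acks={acks}")
```

With 12 blocks, a window of 4 cuts the sender's stalls from 12 to 3, while the number of acknowledgments stays at 12, which is the overhead described above.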

"windowsize" Negotiation

The biggest issue with the windowed approach described so far is that there is no provision for Client and Server to agree on whether to use this modified TFTP protocol or the classic RFC-1350 protocol. From a Server point of view, then, there is no flexible way to support both methods at the same time.

The next obvious step was to define an option to be negotiated under the terms of RFC-2347 before a particular transfer begins. The option's natural name is "windowsize"; it was initially used by Microsoft WDS and derivatives since Vista SP1 and by Serva since v2.0.0.

- Jan-2015: IETF publishes the Standards Track RFC-7440 - TFTP Windowsize Option.

- Apr-2018: Red Hat Enterprise Linux v7.5 included RFC-7440 support and published the corresponding tftp-rfc7440-windowsize.patch for the famous tftpd-hpa/tftp-hpa TFTP Server/Client modules.

- Nov-2018: UEFI EDKII included RFC-7440 support

- There are other implementations in Python, Java, and Go.

From a functional point of view, when e.g. the MS WDS client is instructed to use this option by the corresponding parameter in the previously retrieved BCD, it proposes to the TFTP server the window size specified in that BCD parameter, but never higher than 64 blocks. When the server negotiates the option it can accept the proposed value, but it can also reply with a value smaller than the one initially proposed.
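The server side of this negotiation can be sketched in a few lines. The function name `negotiate_windowsize` and the `server_limit` parameter are hypothetical; the valid range of 1 to 65535 blocks comes from RFC-7440, and leaving an unusable option out of the reply is an assumption about how a server might apply the RFC-2347 rules, not a description of any specific product.

```python
def negotiate_windowsize(requested, server_limit=16):
    """Return the windowsize to place in the OACK, or None to omit the option.

    `requested` is the option value string sent by the client, or None if the
    client did not propose "windowsize". `server_limit=None` means no limit.
    """
    if requested is None:
        return None  # option not proposed: plain lock-step transfer
    try:
        value = int(requested)
    except ValueError:
        return None  # malformed value: do not acknowledge the option
    if not 1 <= value <= 65535:
        return None  # outside the range allowed by RFC-7440
    if server_limit is not None:
        value = min(value, server_limit)  # reply with a smaller "counter offer"
    return value


print(negotiate_windowsize("64", server_limit=4))
print(negotiate_windowsize(None))
```

The key property is that the server never answers with a value larger than the one the client proposed.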

In the next run we see a transfer where a MS WDS TFTP client negotiates the "windowsize" option against Serva TFTP server. The client initially proposes windowsize=64 but finally accepts Serva's "counter offer" of windowsize=4.

192.168.20.30 -> 192.168.20.1 TFTP Read Request, File: \WIA_WDS\w8_DevPrev\_SERVA_\boot\ServaBoot.wim\0, Transfer type: octet\0, tsize\0=0\0, blksize\0=1456\0, windowsize\0=64\0

192.168.20.1 -> 192.168.20.30 TFTP Option Acknowledgement, tsize\0=189206885\0, blksize\0=1456\0, windowsize\0=4\0

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 0

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 1

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 2

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 3

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 4

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 4

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 5

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 6

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 7

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 8

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 8

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 9

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 10

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 11

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 12

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 12

...

List 3: Negotiated Windowed TFTP Transfer

List 3 shows how the unnecessary acknowledgments have been eliminated. The approach is totally backward compatible with pure RFC-1350 clients: if the option "windowsize" is not negotiated, or if the Serva TFTP server answers windowsize=1, the transfer is indistinguishable from a regular single-block lock-step RFC-1350 transfer (more on this later).

The recovery capabilities are also very important. In the next run we see them at work when we simulate an error: block #6 goes missing on the first try.

192.168.20.30 -> 192.168.20.1 TFTP Read Request, File: \WIA_WDS\w8_DevPrev\_SERVA_\boot\ServaBoot.wim\0, Transfer type: octet\0, tsize\0=0\0, blksize\0=1456\0, windowsize\0=64\0

192.168.20.1 -> 192.168.20.30 TFTP Option Acknowledgement, tsize\0=189206885\0, blksize\0=1456\0, windowsize\0=4\0

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 0

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 1

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 2

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 3

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 4

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 4

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 5

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 7 <- block #6 is missing

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 8

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 5 <- ack #5; Last correctly rcvd pkt

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 6 <- resynchronized new window 1st pkt

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 7

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 8

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 9

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 9

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 10

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 11

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 12

192.168.20.1 -> 192.168.20.30 TFTP Data Packet, Block: 13

192.168.20.30 -> 192.168.20.1 TFTP Acknowledgement, Block: 13

...

List 4: Negotiated Windowed TFTP Transfer w/errors

List 4 shows that data block #6 never makes it to the wire on the first try. As soon as the data receiver detects the sequence error, it acknowledges the last data packet correctly received (#5). The data sender then resynchronizes the sequence by starting the next window at the block that follows the acknowledged one (#6).
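The recovery behavior seen in List 4 can be reproduced with a small simulation. This is a simplified sketch with hypothetical names: it assumes the receiver discards out-of-sequence blocks, acknowledges the last in-sequence block once the batch has arrived, and that the sender restarts its window right after the acknowledged block. Timeouts and the short final block are not modeled.

```python
def simulate_read(total_blocks, window, drop_once=()):
    """Return the wire trace of a negotiated windowed transfer.

    `drop_once` lists block numbers that are lost on their first transmission.
    """
    pending_drops = set(drop_once)
    trace = []
    expected = 1  # next block number the receiver is waiting for
    while expected <= total_blocks:
        first = expected  # the sender restarts right after the last ACKed block
        last = min(first + window - 1, total_blocks)
        for block in range(first, last + 1):
            if block in pending_drops:
                pending_drops.discard(block)  # lost: never reaches the wire
                continue
            trace.append(("DATA", block))
            if block == expected:
                expected += 1  # in sequence: accepted by the receiver
            # out-of-sequence blocks are ignored by the receiver
        trace.append(("ACK", expected - 1))  # last block received in sequence
    return trace


for kind, block in simulate_read(13, 4, drop_once={6}):
    print(kind, block)
```

Running it with a window of 4 and block 6 dropped once yields the same DATA/ACK order as List 4: blocks 7 and 8 are sent twice, and the acknowledgment for block 5 is what moves the window.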

Now, the big question: how do we select the right window size? I personally conducted a series of tests using two Toshiba Tecra machines connected back-to-back over Gigabit Ethernet, both running Vista Business with identical hardware: Core 2 Duo 2.2GHz, 4GB RAM, 7200RPM HDDs. On one of them the client performed a Windows 8 (Developer Preview) network install in a VMware environment; on the other, Serva ran as the PXE server. The figures were gathered over the transfer of the 180MB file ServaBoot.wim, under exactly the same conditions for all transfers, changing only the "windowsize" parameter. The next chart summarizes the results.

| windowsize | Time (s) | Improvement (change in time) |
|---|---|---|
| 1 | 257 | 0% |
| 2 | 131 | -49% |
| 4 | 76 | -70% |
| 8 | 54 | -79% |
| 16 | 42 | -84% |
| 32 | 38 | -85% |
| 64 | 35 | -86% |

NOTE: windowsize=1 is equivalent to lock-step RFC-1350.

As we can see, the improvement is significant. A relatively small windowsize=4 gives us a 70% reduction in transfer time compared to plain RFC-1350, and we have error recovery!
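The percentages in the chart follow directly from the measured times, and the same figures can be turned into effective throughput. The sketch below recomputes both. It assumes the transferred file is the 189206885-byte ServaBoot.wim whose size appears as `tsize` in the listings above; the helper names are illustrative.

```python
FILE_SIZE = 189_206_885  # bytes; assumed to be the tsize shown in the listings

# Measured transfer times in seconds, keyed by windowsize (from the chart).
TIMES = {1: 257, 2: 131, 4: 76, 8: 54, 16: 42, 32: 38, 64: 35}


def time_change_percent(seconds, baseline):
    """Change in transfer time relative to the lock-step baseline, in percent."""
    return round((seconds - baseline) / baseline * 100)


def throughput_mib_s(seconds, size=FILE_SIZE):
    """Effective throughput in MiB per second."""
    return size / seconds / (1024 * 1024)


baseline = TIMES[1]
for windowsize, seconds in TIMES.items():
    change = time_change_percent(seconds, baseline)
    rate = throughput_mib_s(seconds)
    print(f"windowsize={windowsize:2d}  {seconds:3d} s  {change:4d}%  {rate:.2f} MiB/s")
```

The output makes the diminishing returns visible: most of the gain is already there at windowsize=8 or 16, which is consistent with limiting the negotiated value rather than always accepting 64.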

Negotiating "windowsize" on a per-transfer basis makes it easy to deal with old RFC-1350 clients. If the "windowsize" option is not proposed to the server, then the server transparently adopts the old RFC-1350 mode and the client will not see any difference.

Scenarios like WDS, where several TFTP clients are loaded at different stages of the procedure, can then be handled perfectly. The old/small TFTP clients, like the ones embedded in the NIC and the first-stage bootstrap loaders, always transfer small files that RFC-1350 handles very well. But when the big guys (e.g. bootmgr.exe) take control and try to move a monster of 180 Megs, the Negotiated Windowed mode is invoked and we can reach transfer rates comparable to those achieved by SMB/CIFS mapped drives. Negotiated Windowed TFTP is definitely something to seriously consider.

Recently Microsoft has gone even further: the Windows 8 bootmgr.exe has incorporated a new negotiated variable, "mstfwindow". When a client proposes this variable to the server with the value mstfwindow=31416 and the server responds with mstfwindow=27182, a new transfer mode is agreed: in-transfer "variable windowsize", where the client and the server start the transfer using windowsize=4 and this value can later be adjusted within the transfer.

Note:

"31416" of course is "pi" and "27182" is "e", the base of the natural logarithms; just Microsoft's "mathematical" way of coming to an agreement on a protocol use...

Getting the most out of your Serva TFTP server.

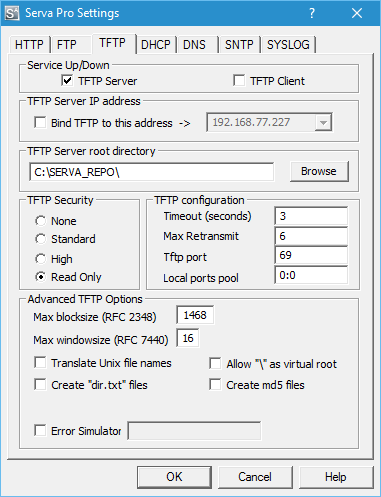

Since Version 2.0.0 Serva uses a new TFTP engine. It implements and runs by default a Negotiated Windowed TFTP server, meaning it natively handles RFC-1350 clients but also parses the "windowsize" option. By default the server limits the value of the requested "windowsize" to 16 blocks, but this limit can be changed or eliminated altogether. The Negotiated Windowed mode can be reduced to RFC-1350 by forcing a limit of windowsize=1.

Serva takes advantage of the negotiated "windowsize" option, which pays special dividends when transferring big files like Boot.wim or ServaBoot.wim. However, the value of 64 blocks proposed by the Microsoft TFTP client (bootmgr.exe) is big, so we limit the negotiated value to e.g. 16.

If you are serious about error recovery, once you have defined your TFTP parameters you can simulate transfer errors and analyze your client's response while monitoring the TFTP traffic on Serva's Log panel and/or with the Wireshark network sniffer.

Serva's TFTP Error Simulation Engine simulates errors by generating missing data blocks at fixed, evenly scattered, or randomly scattered file locations.

The "fixed" mode makes it possible to analyze errors at e.g. window boundaries, the end of the file, etc.

The "even" mode simulates an evenly distributed load of errors.

The "random" mode simulates randomly located errors.

NOTE: Please do not forget to turn the "Error Simulator Engine" OFF in production!
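To illustrate what the three modes mean in terms of which blocks go missing, here is a hypothetical sketch. It is not Serva's implementation; the function name, parameters, and the exact placement rule for the "even" mode are assumptions made only for this example.

```python
import random


def blocks_to_drop(mode, total_blocks, count=0, fixed=(), seed=None):
    """Pick the block numbers to drop once during a simulated transfer."""
    if mode == "fixed":
        # Caller-chosen positions, e.g. window boundaries or the last block.
        return sorted(b for b in set(fixed) if 1 <= b <= total_blocks)
    if mode == "even":
        # Spread `count` errors at regular intervals across the file.
        step = total_blocks / (count + 1)
        return sorted({max(1, round(step * i)) for i in range(1, count + 1)})
    if mode == "random":
        # Pick `count` distinct positions; a seed makes the run repeatable.
        rng = random.Random(seed)
        return sorted(rng.sample(range(1, total_blocks + 1), min(count, total_blocks)))
    raise ValueError(f"unknown mode: {mode}")


print(blocks_to_drop("fixed", 12, fixed=[4, 5, 12]))
print(blocks_to_drop("even", 100, count=4))
print(blocks_to_drop("random", 100, count=4, seed=1))
```

Fixed positions at window boundaries (for example blocks 4, 5 and the last block with windowsize=4) tend to be the most revealing cases when checking a client's recovery logic.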

Final words

Using the data provided in this article you will have a good starting point for using your TFTP server effectively on your next net boot/install endeavor.

If you have comments or ideas on how to improve the information contained in this document, please contact us.

| Updated | 12/01/2018 |

| Originally published | 05/08/2012 |